Text2SQL OpenSource : duckdb-nsql-7B with Ollama and LlamaIndex on local setup

Disclosure: All opinions expressed in this article are my own, and represent no one but myself and not those of my current or any previous employers.

With DuckDB becoming the talk of the town as a query engine, what could be a better experiment than trying out the hot and fresh DUCKDB-NSQL-7B from motherduck, trained using 200k DuckDB text-to-SQL pairs, synthetically generated using Mixtral-8x7B-Instruct-v0.1, guided by the DuckDB v0.9.2 documentation.

Here’s the HF repo and here are the GGUF files.

For this article, I’ll run it using ollama , which will be a breeze ! !

Steps are simple :

- Install ollama for windows and configure(model locations etc ..) it.

- Pull

DUCKDB-NSQL-7Bon local ollama - Run the wonderful LlamaIndex

NLSQLTableQueryEngineon aclassicmodelssales database and test the text2sql capabilitites of duckdb-nsql

Off we go !

Step-1 : Install and configure Ollama

Ollama for windows is on preview , but, I downloaded it from Ollama’s website and installed it anyways !

Next, in order to organize my models properly, I created two environment variables : OLLAMA_MODELS and OLLAMA_HOST . The first one for storing my models away from my C: and the second one for running the local Ollama server.

OLLAMA_MODELS=D:\ollama_models

OLLAMA_HOST=localhostI found, instructions for setting up these ENV variables are a little different for Mac, since, it involves a launchtl step, read here.

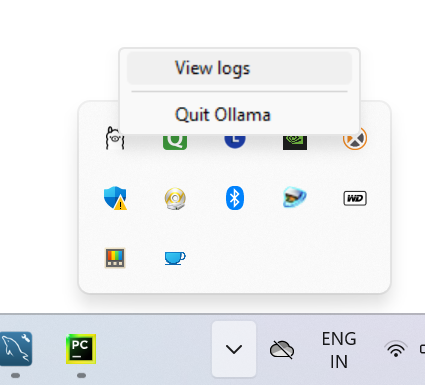

Once, installation is complete, a small Ollama icon appears on the icon tray (for Windows, and on the top bar for Mac )! Quit Ollama kills the process that runs Ollama server at defautl port 11434 and the icon goes away.

Ollama also supports a REST endpoint on the same port 11434, and all CLI commands could be run on the REST endpoint as well, read here.

Step-2 : Pull duckdb-nsql on local ollama server

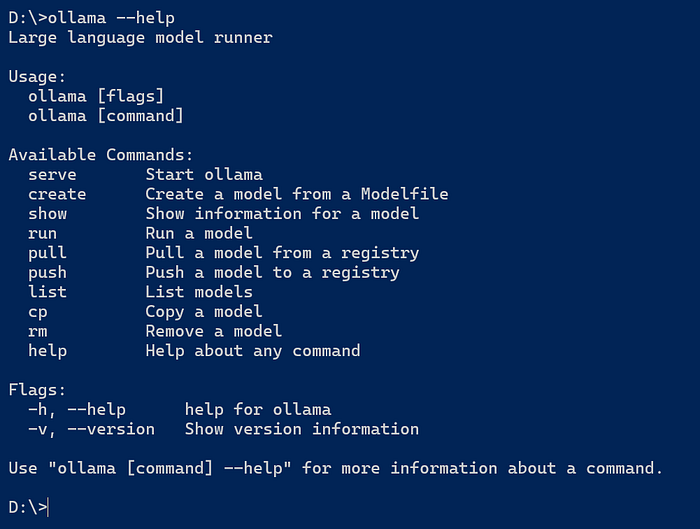

Open up a command prompt and run ollama --help , and it displays the full set of commands :

To browse and select a model at the ollama model libraray, click here.

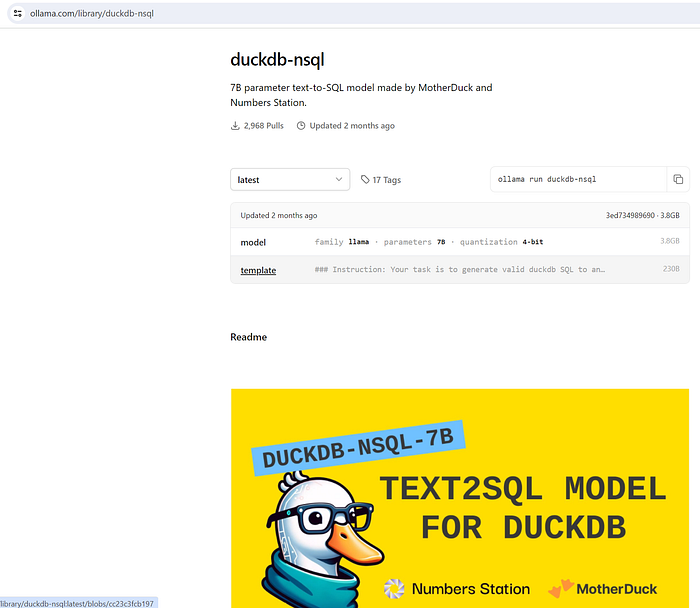

I searched for duckdb at the search prompt and got the duckdb page with instructions of how to pull the model, prompts etc ..

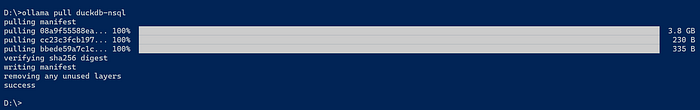

I then ran ollama pull duck-nsql and it pulled the model on my local laptop :

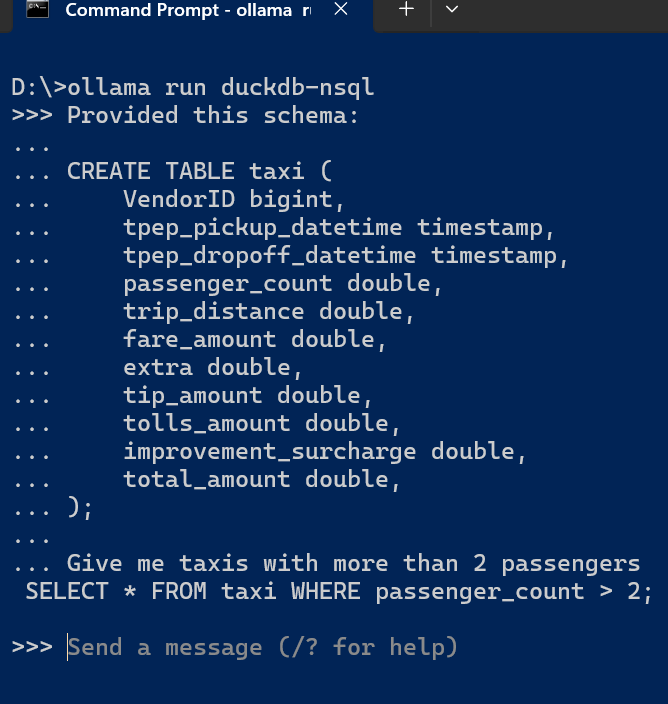

Let’s run duckdb-nsql on Ollama with the following command :

ollama run duckdb-nsql

The >>> symbol means it is ready to take a prompt. You can test it out by copying the example prompt from the Ollama duckdb-nsql page, here.

Here’s the result from duckdb-nsql :

This confirms, that duckdb-nsql text2sql model is running perfectly on my local setup.

With the local Ollama setup, I can easily bypass the following ! (I mean, for a quick experiment …. you get the drift ! ! ):

def load_llm():

# Load the locally downloaded model here

llm = CTransformers(

model = "llama-2-7b-chat.ggmlv3.q8_0.bin",

model_type="llama",

max_new_tokens = 1000,

temperature = 0.5

)

return llmStep-3 : Run duckdb-nsql on ollama to generate text2sql response

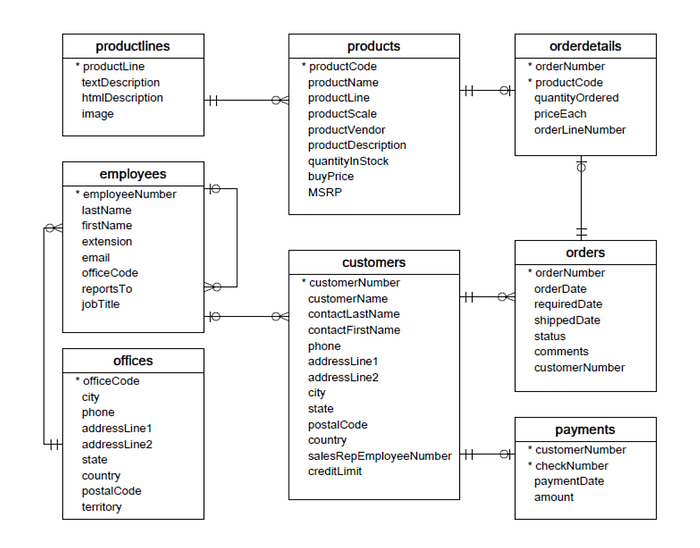

Now, that the model is running, let’s build the database. I am running a Mysql 8.0 instance and created the sample classicmodels database for mysql. I have attached both the DDL and the DML scripts to build the database in the Github repo.

I have used the free MySQL Workbench to run these scripts.

The schema of classicmodels look like this (Source : Kaggle) :

Good enough schema to test the model. Ensure that the database is up and running with the tables and data inserted correctly from the files in the Github repo.

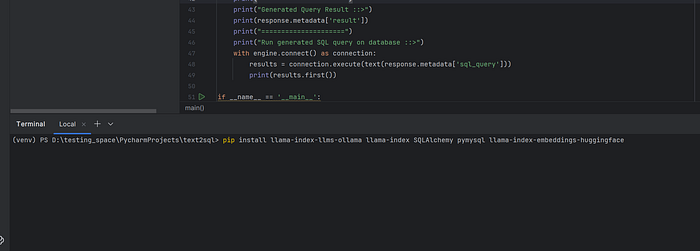

To run my program, I created a new project in Pycharm-Community Edition, which automatically created a virtualenv for my project and installed the following required libraries :

pip install llama-index-llms-ollama llama-index SQLAlchemy pymysql llama-index-embeddings-huggingface

Then, I created a db_config.py to store my DB credentials :

#db_config.py

#==============

db_user = "DB_USERNAME"

db_password = "DB_PASSWORD"

db_host = "localhost"

db_port = "3306"

db_name = "classicmodels"Finally, I created my ollama_duckdb-nsql.py to run my tests. Let’s break the code down (Github repo has the source) :

import db_config

from sqlalchemy import create_engine, text

from llama_index.llms.ollama import Ollama

from llama_index.core import SQLDatabase

from llama_index.core.query_engine import NLSQLTableQueryEngine

from llama_index.embeddings.huggingface import HuggingFaceEmbedding

from llama_index.core import SettingsI chose llama-index , since, it has a built-in NLSQLTableQueryEngine class with great support for connecting to structured data / SQL databases and run natural language queries.

Created a get_connection to setup the DB connection reference :

def get_connection():

return create_engine(

url="mysql+pymysql://{0}:{1}@{2}:{3}/{4}".format(

db_config.db_user, db_config.db_password, db_config.db_host, db_config.db_port, db_config.db_name

)

)Instantiated the llm variable from the LlamaIndex Ollama module with the duckdb-nsql model :

def main():

llm = Ollama(model="duckdb-nsql", request_timeout=30.0)

print("Selected Model :: ", llm.model)

print("=====================")Added the local HF embedding which LlamaIndex will need to embed any queries you make using the query engine later on :

Settings.embed_model = HuggingFaceEmbedding(

model_name="BAAI/bge-small-en-v1.5"

)Created mysql database engine and a list of all tables in the database :

engine = get_connection()

db_tables = ["customers","employees",

"offices","orderdetails",

"orders","payments",

"productlines","products"]Created the LlamaIndex query-engine , which will route the invocation to the duckdb-nsql model. Then the model will generate SQL query from English language and LlamaIndex will return the result along with metadata. The llm variable is the one created earlier with duckdb-nsql model running locally on my Ollama server.

sql_database = SQLDatabase(engine, include_tables=db_tables)

query_engine = NLSQLTableQueryEngine(sql_database=sql_database,

tables=db_tables,

llm=llm)All set ! Let’s test now with the text2sql generation as well as running the generated SQL query on the database table as well :

query_str = "How many customers in Las Vegas ?"

#query_str = "Find customers with no orders"

response = query_engine.query(query_str)

print("Generated Query ::>")

print(response.metadata['sql_query'])

print("=====================")

print("Generated Query Result ::>")

print(response.metadata['result'])

print("=====================")

print("Run generated SQL query on database ::>")

with engine.connect() as connection:

results = connection.execute(text(response.metadata['sql_query']))

print(results.first())query_str = "How many customers in Las Vegas ?"Response :

D:\testing_space\PycharmProjects\text2sql\venv\Scripts\python.exe D:\testing_space\PycharmProjects\text2sql\ollama_duckdb-nsql.py

Selected Model :: duckdb-nsql

=====================

Generated Query ::>

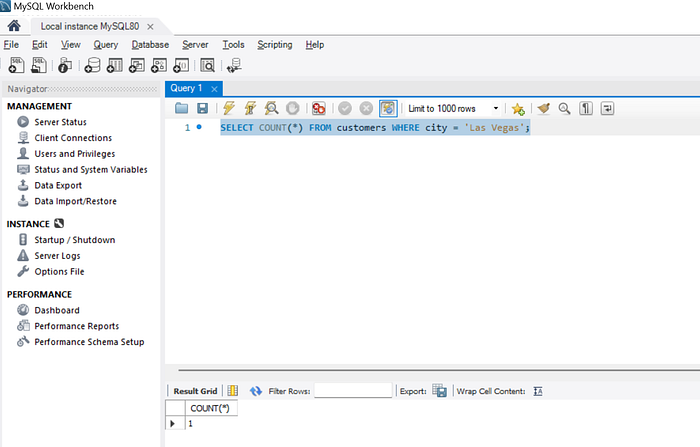

SELECT COUNT(*) FROM customers WHERE city = 'Las Vegas';

=====================

Generated Query Result ::>

[(1,)]

=====================

Run generated SQL query on database ::>

(1,)It not only generates the query, but, also adds the result !

Let’s run the generated query and verify from the database :

Let’s push it up a notch with a LEFT JOIN :

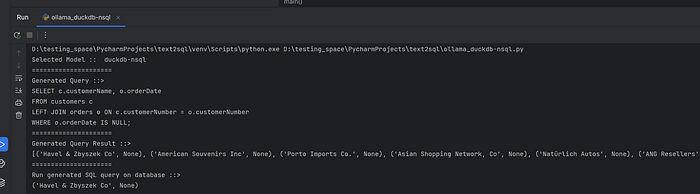

query_str = "Find customers with no orders"Response :

D:\testing_space\PycharmProjects\text2sql\venv\Scripts\python.exe D:\testing_space\PycharmProjects\text2sql\ollama_duckdb-nsql.py

Selected Model :: duckdb-nsql

=====================

Generated Query ::>

SELECT c.customerName, o.orderDate

FROM customers c

LEFT JOIN orders o ON c.customerNumber = o.customerNumber

WHERE o.orderDate IS NULL;

=====================

Generated Query Result ::>

[('Havel & Zbyszek Co', None), ('American Souvenirs Inc', None), ('Porto Imports Co.', None), ('Asian Shopping Network, Co', None), ('Natürlich Autos', None), ('ANG Resellers', None), ('Messner Shopping Network', None), ('Franken Gifts, Co', None), ('BG&E Collectables', None), ('Schuyler Imports', None), ('Der Hund Imports', None), ('Cramer Spezialitäten, Ltd', None), ('Asian Treasures, Inc.', None), ('SAR Distributors, Co', None), ('Kommission Auto', None), ('Lisboa Souveniers, Inc', None), ('Precious Collectables', None), ('Stuttgart Collectable Exchange', None), ('Feuer Online Stores, Inc', None), ('Warburg Exchange', None), ('Anton Designs, Ltd.', None), ('Mit Vergnügen & Co.', None), ('Kremlin Collectables, Co.', None), ('Raanan Stores, Inc', None)]

=====================

Run generated SQL query on database ::>

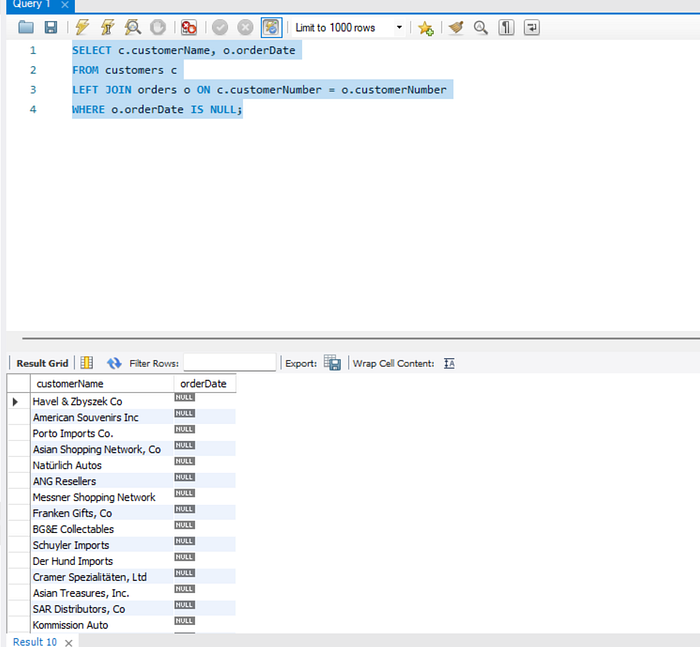

('Havel & Zbyszek Co', None)Although it is correct, I wonder why didn’t it generate WHERE o.orderNumber IS NULL ! Anyway, Let’s validate in the database :

On target again !

Let’s test an aggregation :

query_str = "Find number of orders for each status."Response :

Selected Model :: duckdb-nsql

=====================

Generated Query ::>

SELECT status, COUNT(*)

FROM orders

GROUP BY status;

=====================

Generated Query Result ::>

[('Shipped', 303), ('Resolved', 4), ('Cancelled', 6), ('On Hold', 4), ('Disputed', 3), ('In Process', 6)]

=====================

Run generated SQL query on database ::>

('Shipped', 303)As a DuckDB fan, most of the results were satisfactory !

I understand it is not perfect, you would find complex subquery generation is not perfect ! But, to aid automation of data analysis use-cases, models like these are extremely helpful !

Code and db scripts in repo !

So long and happy coding !